The State of the Art

Data lives in many different places. Some of it might live in Apache Kafka, while other important, related data might arrive as server-sent events from a server somewhere. Some of your data might even live in a text file that you need to stream in.

Let’s quickly emulate the state of the art and see what it’s like to retrieve some data from Kafka.

Here is a docker-compose.yaml file that we can use to stand up Kafka:

    version: '2'
    services:
      zookeeper:
        image: confluentinc/cp-zookeeper:latest
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000
        ports:
          - "2181:2181"
      kafka:
        image: confluentinc/cp-kafka:latest
        ports:
          - "9092:9092"
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9092
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
        depends_on:
          - zookeeper

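After running docker-compose up -d to stand up Kafka, we can test and interact with it using a few bash commands. The commands below assume Compose named the broker container kafka-kafka-1, which is what you get when the compose file lives in a directory named kafka; adjust the name if yours differs. Let’s publish some data to our Kafka.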

First, let’s create a topic:

    docker exec -it kafka-kafka-1 kafka-topics \
      --create \
      --bootstrap-server localhost:9092 \
      --replication-factor 1 \
      --partitions 1 \
      --topic my-family

This command creates the my-family topic. Let’s fill it in with some members of my family as an example.

We can use the following bash command to begin publishing to the topic:

    docker exec -it kafka-kafka-1 kafka-console-producer \
      --topic my-family \
      --bootstrap-server localhost:9092

And we can use this command to subscribe to the topic, and watch as data streams into Kafka:

    docker exec -it kafka-kafka-1 kafka-console-consumer \
      --topic my-family \
      --from-beginning \
      --bootstrap-server localhost:9092

Here’s what it looks like after inputting 7 different members of my family:

In the above example, I submitted 7 strings of data, and I can see each being emitted from Kafka in my subscriber terminal.

To work with this data, though, we need a higher level of abstraction than the command-line interface, where we can’t easily transform the data. To build a data pipeline, we’ll need a programming language to express that logic.

Let’s create a Scala service that prints the strings coming in from our my-family topic, similar to what the kafka-console-consumer was doing.

    import scala.concurrent.{ExecutionContext, Future}

    import org.apache.kafka.clients.consumer.{ConsumerConfig, ConsumerRecord}
    import org.apache.kafka.common.serialization.StringDeserializer
    import org.apache.pekko.Done
    import org.apache.pekko.actor.ActorSystem
    import org.apache.pekko.kafka.scaladsl.Consumer
    import org.apache.pekko.kafka.{ConsumerSettings, Subscriptions}
    import org.apache.pekko.stream.scaladsl.{Sink, Source}

    object KafkaTestMain extends App {
      // The actor system runs the stream; its dispatcher runs the completion callback.
      implicit val actorSystem: ActorSystem = ActorSystem()
      implicit val ec: ExecutionContext = actorSystem.dispatcher

      val bootstrapServers = "localhost:9092"
      val topic = "my-family"

      // Keys and values are plain strings. "earliest" starts from the beginning
      // of the topic when the consumer group has no committed offsets.
      val consumerSettings: ConsumerSettings[String, String] =
        ConsumerSettings(actorSystem, new StringDeserializer, new StringDeserializer)
          .withBootstrapServers(bootstrapServers)
          .withGroupId("group1")
          .withProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest")

      // A source that emits one ConsumerRecord per message on the topic.
      val source: Source[ConsumerRecord[String, String], Consumer.Control] =
        Consumer.plainSource(consumerSettings, Subscriptions.topics(topic))

      // Extract each record's value and print it.
      val done: Future[Done] = source
        .map(record => record.value())
        .runWith(Sink.foreach(println))

      // Shut down the actor system once the stream completes or fails.
      done.onComplete { case _ =>
        actorSystem.terminate()
      }
    }

This small Scala application subscribes to our Kafka my-family topic and just prints the strings emitted.

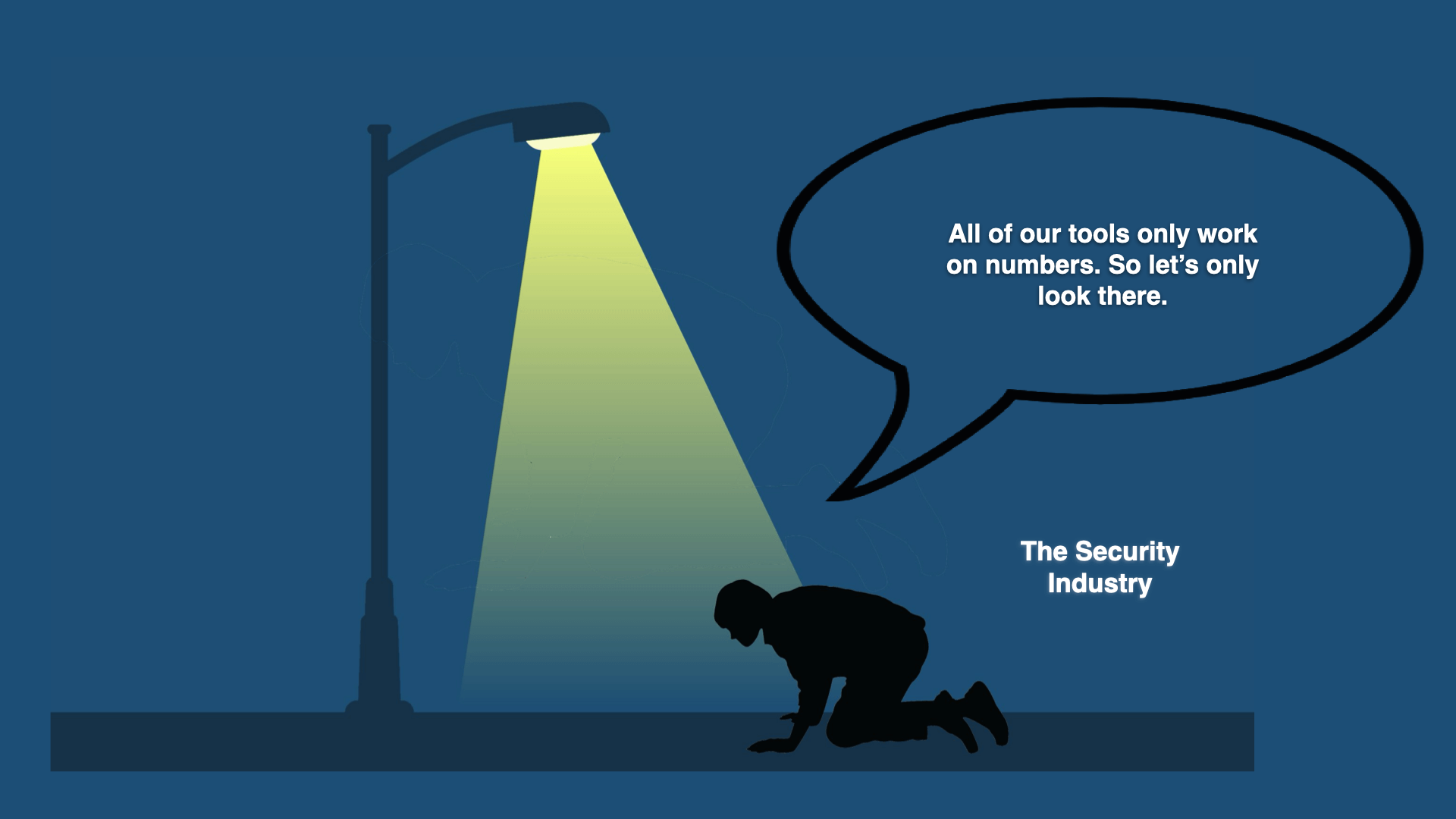

This small example shows that we can create bespoke software that subscribes to data streams, and works with the emitted data. But like I mentioned at the beginning of this post, our data can live in many different locations. If my use-case demanded it, I would need to write up more logic to stream in data from many different sources. The above example is the initial step. But many more steps are required to make even data from this single source ready for production.

Scalability, Resilience, Maintainability

Because we are streaming data in, and not batch processing our data, our service must be up 24/7. This requirement means the following:

- The microservice must be able to scale with the amount of data being ingested, tackling challenges like service discovery, network latency, and load balancing.

- It must be resilient, capable of handling network outages, able to self-heal if a fault occurs, and able to restore from timely backups.

- It must be able to rein in complexity, and allow developers to add new features based on stakeholder needs, with a careful eye on dependency management and rich documentation.
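As one small illustration of what the resilience requirement alone involves, here is a minimal sketch of retrying a failing operation with exponential backoff, using only the Scala standard library. The names RetrySketch, backoffDelay, and retry are hypothetical, made up for this example. A production service would more likely lean on its streaming library’s restart and supervision support than on a hand-rolled loop like this.

```scala
import scala.annotation.tailrec
import scala.concurrent.duration._
import scala.util.{Failure, Success, Try}

// Hypothetical helper, for illustration only.
object RetrySketch {
  // Delay before retry number `attempt` (0-based): base * 2^attempt, capped at `max`.
  def backoffDelay(attempt: Int, base: FiniteDuration, max: FiniteDuration): FiniteDuration = {
    val factor = 1L << math.min(attempt, 20) // bound the shift so the product stays small
    math.min(base.toMillis * factor, max.toMillis).millis
  }

  // Runs `op` until it succeeds or `maxAttempts` tries have failed,
  // sleeping for the backoff delay between tries.
  def retry[A](maxAttempts: Int, base: FiniteDuration, max: FiniteDuration)(op: () => A): Try[A] = {
    @tailrec
    def loop(attempt: Int): Try[A] =
      Try(op()) match {
        case s @ Success(_) => s
        case f @ Failure(_) if attempt + 1 >= maxAttempts => f
        case Failure(_) =>
          Thread.sleep(backoffDelay(attempt, base, max).toMillis)
          loop(attempt + 1)
      }
    loop(0)
  }
}
```

Wrapping a connection attempt in RetrySketch.retry(5, 100.millis, 5.seconds)(() => connect()), where connect is whatever call might fail, covers one failure mode for one source. Every additional source needs the same treatment.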

Applying all of this to our example of streaming data in from a single Kafka topic would require following best practices when implementing event-driven architecture patterns. Think techniques like event sourcing, the saga pattern, and CQRS (Command Query Responsibility Segregation).

Applying proven patterns to our service will result in a scalable, resilient, and maintainable piece of software, but doing this repeatedly for every additional source of data is repetitive, and prone to errors.

What we need is a new state of the art, allowing us to ingest data from event sources in a scalable, resilient, and easily maintainable way.

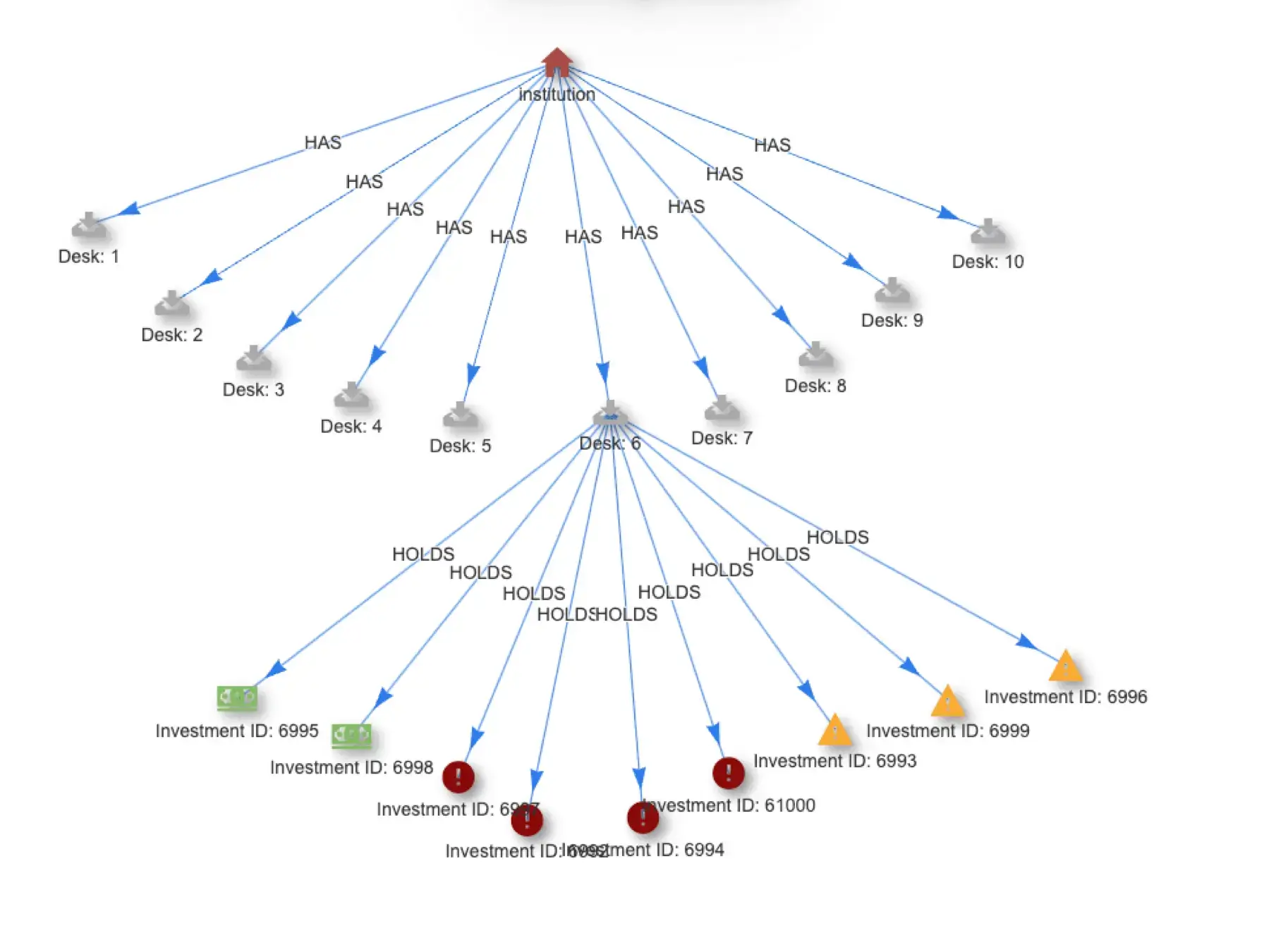

Enter thatDot Streaming Graph, the New State of the Art

Let’s do the exact same thing as above, but using thatDot Streaming Graph, the world’s first streaming graph data processor. We’ll point Streaming Graph at our Kafka, ingest data from our my-family topic, and view our results. This time though, we won’t need to write any bespoke programs to handle how to ingest data.

First, grab your own copy of Quine (the open source version of thatDot Streaming Graph) by clicking here and downloading the JAR file. As of this blog post, I’m downloading v1.6.2 of Quine.

To start it run the following command:

java -Dquine.store.type=in-memory -jar Downloads/quine-1.6.2.jar

This will start Quine with an in-memory persistor, meaning it will not save any data on disk. This is great for testing, since it doesn’t leave anything on disk that has to be cleaned up later. Navigate to http://127.0.0.1:8080/ and you’ll see the Quine Exploration UI.

The docs are interactive, so you can enter the code directly on the page that explains how it works. Quine can ingest data from multiple different sources simultaneously. Just like in the previous example, let’s create an Ingest Stream, populating our graph with data from Kafka.

We’ll use the following JSON to create an ingest stream from the my-family topic.

    {
      "type": "KafkaIngest",
      "format": {
        "type": "CypherRaw",
        "query": "WITH text.utf8Decode($that) as name MATCH (n) WHERE id(n) = idFrom(name) SET n:Person, n.name=name"
      },
      "topics": ["my-family"],
      "autoOffsetReset": "earliest",
      "bootstrapServers": "localhost:9092"
    }

What does this JSON do? First, it instructs Quine to create an Ingest Stream of type “KafkaIngest” that subscribes to the my-family topic, with the autoOffsetReset option set to earliest so that reading starts from the beginning of the topic. The Cypher query receives the raw bytes of each Kafka record as $that and decodes them as a UTF-8 string. It then creates a Person node on the graph for each name in the incoming data stream. Because idFrom(name) derives the node’s ID from the name, the same name always maps to the same node.

Pasting in that JSON, giving the Ingest Stream a name (in this case family-ingest-stream), and then clicking Send API Request, should result in a 200 return code, meaning we successfully created an ingest stream.
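If you would rather script this step than paste into the docs page, the same request can be sent from code. The sketch below uses the JDK’s built-in HTTP client (Java 11+). It assumes the ingest is created with a POST to /api/v1/ingest/{name} on Quine’s v1 REST API and that Quine is listening on 127.0.0.1:8080; check the interactive docs for your version before relying on that path. CreateIngest, buildRequest, and create are hypothetical names for this example.

```scala
import java.net.URI
import java.net.http.{HttpClient, HttpRequest, HttpResponse}

// Hypothetical helper, for illustration only.
object CreateIngest {
  // The same ingest definition shown above.
  val ingestJson: String =
    """{
      "type": "KafkaIngest",
      "format": {
        "type": "CypherRaw",
        "query": "WITH text.utf8Decode($that) as name MATCH (n) WHERE id(n) = idFrom(name) SET n:Person, n.name=name"
      },
      "topics": ["my-family"],
      "autoOffsetReset": "earliest",
      "bootstrapServers": "localhost:9092"
    }"""

  // Builds the POST request. The /api/v1/ingest/{name} path is an assumption.
  def buildRequest(baseUrl: String, name: String, body: String): HttpRequest =
    HttpRequest.newBuilder()
      .uri(URI.create(s"$baseUrl/api/v1/ingest/$name"))
      .header("Content-Type", "application/json")
      .POST(HttpRequest.BodyPublishers.ofString(body))
      .build()

  // Sends the request and returns the HTTP status code.
  def create(baseUrl: String, name: String, body: String): Int =
    HttpClient.newHttpClient()
      .send(buildRequest(baseUrl, name, body), HttpResponse.BodyHandlers.ofString())
      .statusCode()
}
```

With Quine running locally, CreateIngest.create("http://127.0.0.1:8080", "family-ingest-stream", CreateIngest.ingestJson) sends the request and returns the status code.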

If we list the Ingest Streams, we can see our named ingest, along with the ingestedCount of 7 records.

Returning to the Exploration UI, enter the basic Cypher query MATCH (n) RETURN n to see the results of our ingest.

We didn’t have to write up a custom microservice to load in data from this source. We just pointed Quine at our source, gave it a few parameters, and told it to start the ingest, transforming our data using Cypher.

If we wanted to consume data from a file, we would similarly instruct Quine to ingest data from a file, and pass in a Cypher query to transform the data.
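As a rough sketch of what that could look like, the helper below builds an ingest definition for a text file with one name per line. It assumes Quine’s FileIngest type with a path field and the CypherLine format, where $that is bound to each line as a string; confirm the field names against the docs for your version. FileIngestSketch and fileIngestJson are hypothetical names, and the path is inserted without JSON escaping, so this only suits simple paths.

```scala
// Hypothetical helper, for illustration only.
object FileIngestSketch {
  // Builds a FileIngest definition for a newline-delimited text file.
  // Assumes `path` contains no characters that need JSON escaping.
  def fileIngestJson(path: String): String =
    s"""{
       |  "type": "FileIngest",
       |  "path": "$path",
       |  "format": {
       |    "type": "CypherLine",
       |    "query": "MATCH (n) WHERE id(n) = idFrom($$that) SET n:Person, n.name = $$that"
       |  }
       |}""".stripMargin
}
```

The Cypher query is nearly the same as the Kafka one. The only difference is that there are no raw bytes to decode, because each line already arrives as a string.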

Enhancing the Robustness of the Streaming Graph in Production Environments

Just as with our custom microservice, running Streaming Graph in production means focusing on scalability, resilience, and maintainability.

Optimizing for Scalability

thatDot Streaming Graph, the commercial version of Quine, is engineered for horizontal expansion.

Another pivotal feature is its implementation of backpressure, which dynamically regulates data processing speeds in alignment with the consuming service’s capacity. This ensures Streaming Graph avoids overwhelming downstream services, no matter how high the system scales. No need for us to do anything to make that happen.
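To make the idea of backpressure concrete, here is a minimal sketch using a bounded buffer from the JDK. This is not how Streaming Graph implements it; it only illustrates the principle that a fast producer gets slowed to the pace of a slow consumer instead of piling up unbounded work. BackpressureSketch and run are hypothetical names for this example.

```scala
import java.util.concurrent.ArrayBlockingQueue
import scala.collection.mutable.ArrayBuffer

// Hypothetical helper, for illustration only.
object BackpressureSketch {
  // Pushes `items` through a bounded buffer to a consumer that spends
  // `consumerDelayMs` on each item. Returns what the consumer received
  // and the largest buffer size the producer observed.
  def run(items: Seq[String], capacity: Int, consumerDelayMs: Long): (Seq[String], Int) = {
    val buffer = new ArrayBlockingQueue[String](capacity)
    val received = ArrayBuffer.empty[String]
    var maxSeen = 0

    // Slow consumer: takes exactly one element per item produced.
    val consumer = new Thread(() => {
      items.indices.foreach { _ =>
        val item = buffer.take()
        Thread.sleep(consumerDelayMs)
        received += item
      }
    })
    consumer.start()

    // Fast producer: put() blocks while the buffer is full,
    // so the producer can never get more than `capacity` items ahead.
    items.foreach { item =>
      buffer.put(item)
      maxSeen = math.max(maxSeen, buffer.size())
    }

    consumer.join() // wait until everything has been consumed
    (received.toSeq, maxSeen)
  }
}
```

Calling BackpressureSketch.run(names, capacity = 2, consumerDelayMs = 50) delivers every name in order while the buffer never holds more than two items, no matter how quickly the producer could have gone.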

Ensuring Resilience

Streaming Graph’s resilience is significantly bolstered by the backpressure mechanisms, safeguarding against data loss during unexpected downtimes by adjusting the data flow based on the consumer’s current state. If the consumer can’t consume for a while, Streaming Graph pauses until it is ready.

Streaming Graph is also designed to be self-healing. In the event of a cluster member failure, the system automatically delegates a hot-spare to jump in and assume the role of the downed member, ensuring uninterrupted data processing. This resilience is further enhanced by leveraging durable data storage solutions such as Cassandra or ClickHouse.

Maximizing Maintainability

thatDot Streaming Graph has the ability to ingest data from multiple sources, simultaneously, removing the need to create multiple bespoke services. You can ingest data from:

- Apache Kafka

- AWS Kinesis

- S3

- Server-Sent Events (SSE)

- WebSockets

- And more

This also provides extra functionality, since you can resolve duplicates, and find relationships between multiple data streams in real time.
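The idFrom call in the ingest query earlier is what makes duplicate resolution work: because a node’s ID is derived from the value, the same name arriving from two different streams lands on the same node. The sketch below imitates that idea with the JDK’s name-based UUIDs. It is a stand-in for illustration, not Quine’s actual ID scheme, and DedupSketch, nodeId, and merge are hypothetical names.

```scala
import java.nio.charset.StandardCharsets
import java.util.UUID

// Hypothetical helper, for illustration only.
object DedupSketch {
  // Deterministic ID: the same input parts always produce the same UUID.
  def nodeId(parts: String*): UUID =
    UUID.nameUUIDFromBytes(parts.mkString("|").getBytes(StandardCharsets.UTF_8))

  // Merges names from several streams into one map keyed by node ID,
  // so a name seen in more than one stream collapses to a single entry.
  def merge(streams: Seq[Seq[String]]): Map[UUID, String] =
    streams.flatten.map(name => nodeId(name) -> name).toMap
}
```

Merging Seq("Alice", "Bob") and Seq("Bob", "Carol") this way yields three entries rather than four, since both occurrences of Bob map to the same ID.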

Streaming Graph and Quine use the Cypher query language to transform all data sources, allowing a consistent experience when ingesting data sourced from different locations.

Elevating Your Data Architecture: Discover the Power of thatDot Streaming Graph

In software development, when complexity starts getting too high, refactoring to use a higher-level abstraction can be a winning strategy. The current state of the art with microservices and data pipelines is incredibly complex, demanding a higher level of abstraction. thatDot Streaming Graph is that higher level of abstraction. It can handle the problems of scalability and resilience automatically, so developers can focus on the logic that matters, transforming high-volume data into high-value insight.

Click here to download your own open source copy of Quine, and click here to jump into our Discord to join other like-minded developers looking to solve the challenges of high-volume data pipelines at scale.