Quine 1.4.0: Scale, Stability, Supernode Mitigation

A Major Release Fast on the Heels of a Major Milestone

Today marks the release of Quine 1.4.0, with significant improvements to resource utilization and developer experience. This release affects both the open source and enterprise versions of Quine and is not backward compatible with previous versions.

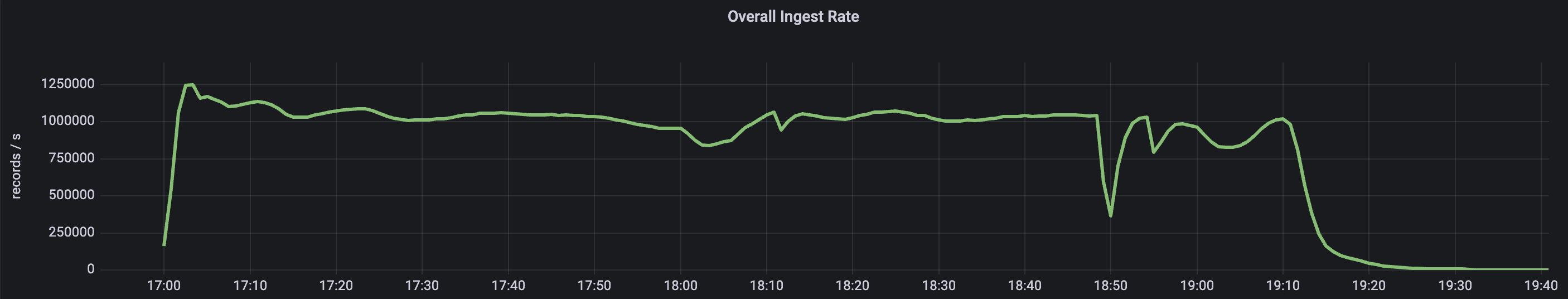

The development of Quine 1.4.0 and our recent landmark achievement, in which Quine Enterprise processed one million graph events per second, are deeply intertwined. We ran those tests using an early 1.4.0 release candidate and incorporated the lessons learned and bug fixes from those tests into the final released version.

Quine 1.4.0 also contains much of the foundational work for the next leap forward in performance and stability.

Highlights of Changes impacting both Quine Community and Quine Enterprise

(Full release notes for Quine 1.4.0)

Domain Graph Nodes (DGNs) – sometimes the highest-impact feature isn’t a glamorous one, but one that sets the table for more ambitious, high-visibility improvements. Such is the case with DGNs, which not only contribute immediate performance and resource utilization improvements but also lay the foundation for coming breakthroughs in supernode mitigation. (Supernodes, the bane of graph data models, are nodes with so many edges that they hurt memory usage and performance.)

With the set of PRs associated with DGNs, we rewrote the serialization, persistence, and message-passing system used by DistinctId standing queries. Previously, every node that stored a component of a relevant standing query used substantial memory to hold it. Now these partial query objects are stored in a new top-level persistent entity called DomainGraphNode.

Impact: reduces memory footprint, prepares the way for supernode mitigation, does not support graphs created in Quine 1.3.2 or earlier.

Evolution of supernodes during ingest visualized in Quine’s Exploration UI.
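To make the memory argument concrete, here is a minimal Python sketch of the general idea: store each partial query object once in a shared, top-level registry and have nodes hold only a reference to it. Every name here (`QueryPart`, `DomainGraphNodeStore`, `GraphNode`) is hypothetical. This is not Quine's DomainGraphNode implementation, only an illustration of replacing per-node copies with shared, ID-keyed entries.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class QueryPart:
    """Hypothetical stand-in for one component of a standing query."""
    label: str
    constraints: tuple  # e.g. (("type", "login"),)


class DomainGraphNodeStore:
    """Hypothetical top-level store: each distinct query part is kept once, keyed by a stable ID."""

    def __init__(self) -> None:
        self._parts: dict[str, QueryPart] = {}

    def register(self, part: QueryPart) -> str:
        # Derive the ID from the part's content so identical parts share one entry.
        dgn_id = hashlib.sha256(repr(part).encode("utf-8")).hexdigest()[:16]
        self._parts.setdefault(dgn_id, part)
        return dgn_id

    def get(self, dgn_id: str) -> QueryPart:
        return self._parts[dgn_id]

    def __len__(self) -> int:
        return len(self._parts)


@dataclass
class GraphNode:
    node_id: int
    dgn_ids: set = field(default_factory=set)  # references only, not full query objects


store = DomainGraphNodeStore()
part = QueryPart("login", (("type", "login"),))
nodes = [GraphNode(i) for i in range(10_000)]
for node in nodes:
    node.dgn_ids.add(store.register(part))

print(len(store))  # 1: one stored query part shared by 10,000 nodes
```

The per-node cost drops to one short ID, while the query part itself is stored, and could be persisted, in one place.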

Documentation Improvements – as part of our never-ending quest to make docs.quine.io as easy and clear to use as possible, we made significant improvements throughout, most notably to:

- Getting Started – reorganized and simplified all onboarding material and the Quine basics.

- Core Concepts – added Streaming Systems, with details on Apache Kafka and Kinesis.

- Components – expanded on the properties of components and, most importantly, on the idFrom function.

- Recipe Documentation – expanded and reorganized across the docs site.

Impact: better organization and simpler explanations of some of Quine’s notable core concepts.

Usability and performance improvements to reify.time – With Quine 1.4.0, the reify.time function yields only the finest-granularity reified period node, whereas it previously returned an array with all time nodes.

With this adjustment, reify.time makes it easier to write well-behaved queries that would otherwise tend to turn large-period time nodes into supernodes. For example, the time node for “2022” would tend to become a supernode. The change avoids this because a Cypher query calling reify.time no longer inadvertently operates on every period in the hierarchy. It operates only on the time node for the smallest period.

Existing Cypher scripts remain syntactically compatible. However, their behavior changes, specifically in the YIELD clause following CALL reify.time. Previously, the YIELD clause emitted every time node in the period hierarchy created by reify.time. Now it emits only a single time node.

Impact: better query ergonomics, reduces likelihood a supernode is created, does not support reify.time queries created for Quine 1.3.2 or earlier.
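The supernode argument can be checked with a small Python model. It assumes a query that links each event to every time node it receives from YIELD. The time-node labels and the two `reify_*` functions are hypothetical models of the old and new YIELD behavior, not Quine's API. The sketch counts how many events end up attached to the busiest time node over one hour of one-event-per-second traffic.

```python
from collections import Counter
from datetime import datetime, timedelta

# Coarsest to finest; the labels are illustrative, not Quine's node format.
PERIOD_FORMATS = {
    "year": "%Y",
    "month": "%Y-%m",
    "day": "%Y-%m-%d",
    "hour": "%Y-%m-%dT%H",
    "minute": "%Y-%m-%dT%H:%M",
    "second": "%Y-%m-%dT%H:%M:%S",
}


def reify_all_periods(ts: datetime) -> list[str]:
    """Model of the pre-1.4.0 YIELD behavior: one row per period in the hierarchy."""
    return [ts.strftime(fmt) for fmt in PERIOD_FORMATS.values()]


def reify_finest_period(ts: datetime) -> list[str]:
    """Model of the 1.4.0 YIELD behavior: only the finest-granularity period."""
    finest_fmt = list(PERIOD_FORMATS.values())[-1]
    return [ts.strftime(finest_fmt)]


def max_degree(reify, events) -> int:
    """Largest number of events attached to any one time node."""
    degree = Counter()
    for ts in events:
        for time_node in reify(ts):  # the modeled query links the event to every yielded node
            degree[time_node] += 1
    return max(degree.values())


start = datetime(2022, 3, 1, 12, 0, 0)
events = [start + timedelta(seconds=i) for i in range(3600)]  # one event per second for an hour
print(max_degree(reify_all_periods, events))    # 3600: the "2022" node touches every event
print(max_degree(reify_finest_period, events))  # 1: each second node touches one event
```

The period hierarchy itself can still exist in the graph. What changes is that each event gets one edge to a small-period node instead of one edge to every period, including the ever-growing year node.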

Added text.urlencode and text.urldecode Cypher functions – These handy functions are especially useful for standing query results. They perform URL encoding or decoding of a string used as an HTTP POST body or URL component. This is particularly useful for creating the #-string query that links to a specific view in the Quine Exploration UI.

Impact: standing query outputs are now more readily usable in other, downstream applications and for demos.
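For readers who want to see what URL encoding does to a Cypher query, here is a Python sketch using the standard library's `urllib.parse.quote`. The base URL (`http://localhost:8080/`) is an assumption, and whether text.urlencode encodes every character exactly as `quote(..., safe="")` does (for example, spaces as `%20` rather than `+`) is also an assumption. The point is only that the encoded query survives as a single URL fragment and decodes back losslessly.

```python
from urllib.parse import quote, unquote

# Hypothetical base URL; adjust host and port for your deployment.
EXPLORATION_UI = "http://localhost:8080/"


def exploration_ui_link(cypher: str) -> str:
    # safe="" percent-encodes everything except unreserved characters (letters, digits, "_.-~"),
    # so spaces, parentheses, and "=" cannot break the URL fragment.
    return EXPLORATION_UI + "#" + quote(cypher, safe="")


query = "MATCH (n) WHERE id(n) = idFrom(-1) RETURN n"
link = exploration_ui_link(query)
print(link)

# Decoding the fragment recovers the original query text.
print(unquote(link.split("#", 1)[1]) == query)  # True
```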

Added atomic “count” return value to incrementCounter procedure – The core functionality of incrementCounter takes advantage of Quine’s unique computational model to keep a perfectly-synchronized counter on a single node. The old VOID version (i.e., returning nothing) ensured the counter was updated correctly but provided no way to access the uniquely incremented counter value.

We added a “count” yielded value to the procedure, which can be used via the standard syntax for Cypher procedures (see the openCypher 9 specification, https://s3.amazonaws.com/artifacts.opencypher.org/openCypher9.pdf, page 122). For example, `CALL incrementCounter(myNode, "counter") YIELD count AS updatedCount` increments a property named “counter” on the node referred to as myNode, then adds a variable called updatedCount to the query context, containing the new value of that property.

Of particular note is that multiple query executions running in parallel will get unique values returned from the procedure’s yielded “count”.

An example of how to use the new version:

```cypher
MATCH (n) WHERE id(n) = idFrom(-1) CALL incrementCounter(n, 'prop') YIELD count RETURN id(n), count
```

This increments the “prop” counter on the node with id idFrom(-1) and returns the count. Multiple invocations of this query, even in parallel, will all return a unique count value.

Impact: counters are more flexible, and can be read under high parallelism.
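Why do parallel callers always see unique counts? A node that processes its messages strictly one at a time can hand each caller the value produced by that caller's own increment. The Python sketch below models that idea with a single-worker mailbox. `CounterNode` and `increment_counter` are hypothetical names, and this is a simplified model of the behavior the release note describes, not Quine's internals.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor


class CounterNode:
    """Hypothetical model of a single graph node that handles its messages one at a time."""

    def __init__(self) -> None:
        self._properties: dict[str, int] = {}
        self._mailbox: queue.Queue = queue.Queue()
        threading.Thread(target=self._run, daemon=True).start()

    def _run(self) -> None:
        # One worker drains the mailbox, so two increments can never interleave.
        while True:
            prop, reply = self._mailbox.get()
            new_value = self._properties.get(prop, 0) + 1
            self._properties[prop] = new_value
            reply.put(new_value)  # the "count" handed back to this caller only

    def increment_counter(self, prop: str) -> int:
        reply: queue.Queue = queue.Queue(maxsize=1)
        self._mailbox.put((prop, reply))
        return reply.get()  # blocks until the node has applied this increment


node = CounterNode()
with ThreadPoolExecutor(max_workers=16) as pool:
    counts = list(pool.map(lambda _: node.increment_counter("prop"), range(1000)))

print(len(set(counts)))  # 1000: every parallel caller received a distinct count
```

No lock is needed around the read-modify-write. Serializing messages at the node is what makes each returned count unique.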

Standing queries can now use idFrom – Previously, only ingest and ad hoc queries could use idFrom, Quine’s hash-based, high-performance alternative to indices for node lookup and retrieval. Standing queries can now use `idFrom`-based ID constraints, provided that all arguments to `idFrom` are literal values.

An example of a standing query using idFrom:

```cypher
MATCH (n) WHERE id(n) = idFrom('my', 'special', 1, 'node') RETURN DISTINCT id(n) AS specialNodeId
```

This query matches exactly one node: the node with the ID idFrom('my', 'special', 1, 'node').

Impact: brings standing queries, whether using MultipleValues or DistinctId, closer to the full range of functionality available in ad-hoc queries.
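The literal-arguments requirement makes sense once you see idFrom as a deterministic hash. The same inputs always produce the same ID, so a constraint built only from literals names one specific node before any data arrives. The Python sketch below is a hypothetical stand-in (`id_from`, SHA-256, and the UUID output format are all assumptions). It illustrates the idea of hash-derived IDs, not Quine's actual hashing scheme.

```python
import hashlib
import json
import uuid


def id_from(*values) -> uuid.UUID:
    """Hypothetical stand-in for idFrom: derive a deterministic ID from literal arguments."""
    # Tag each value with its type so idFrom(1) and idFrom('1') hash differently.
    canonical = json.dumps([[type(v).__name__, v] for v in values])
    digest = hashlib.sha256(canonical.encode("utf-8")).digest()
    return uuid.UUID(bytes=digest[:16])


# With literal arguments the ID is fixed before any data arrives,
# so a constraint like id(n) = idFrom(...) pins the query to one node.
special = id_from("my", "special", 1, "node")
print(special == id_from("my", "special", 1, "node"))    # True: same inputs, same node
print(special == id_from("my", "special", "1", "node"))  # False: different literal type
```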

Added support for decoding steps during ingest (base64, zlib, and gzip) – This one is pretty simple and self-explanatory, but we draw your attention to it as it means you can ingest compressed event data from Kafka and Kinesis.

Impact: better performance; supports gzip and zlib decompression and base64 decoding of input.
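Decoding steps apply in the reverse order of how the producer encoded the record. Here is a Python sketch using only the standard library's `base64`, `gzip`, and `zlib` modules. The step names and the `decode` helper are hypothetical and mirror the release note; they are not Quine's ingest configuration syntax.

```python
import base64
import gzip
import zlib

# Hypothetical step names mirroring the release note; not Quine's configuration syntax.
DECODERS = {
    "base64": base64.b64decode,
    "gzip": gzip.decompress,
    "zlib": zlib.decompress,
}


def decode(payload: bytes, steps: list[str]) -> bytes:
    """Apply decoding steps in the order listed, e.g. ["base64", "gzip"]."""
    for step in steps:
        payload = DECODERS[step](payload)
    return payload


event = b'{"user": "alice", "action": "login"}'

# What a producer might publish to Kafka or Kinesis: gzip first, then base64 for transport.
wire = base64.b64encode(gzip.compress(event))
print(decode(wire, ["base64", "gzip"]).decode("utf-8"))  # {"user": "alice", "action": "login"}

# A zlib-compressed record needs only one step.
print(decode(zlib.compress(event), ["zlib"]) == event)   # True
```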

Enterprise-focused Improvements

Improvements to Quine Enterprise in this release focus on cluster management and on querying cluster state.

Added Cypher function clusterPosition() to get the executing member’s position – You can now get the index of the executing cluster member. When combined with locIdFrom, as in locIdFrom(clusterPosition(), "prop1", "prop2"), the resulting node ID hashes uniquely to the current host.

Cluster-position-aware locIdFrom function – The locIdFrom function now accepts a Quine cluster position integer as its first argument rather than a partition. To map a partition to a position integer, use the new kafkaHash function, for example locIdFrom(kafkaHash("india"), "West Bengal", 12345). Note: QuineIds allocated by Quine 1.3.2 and earlier may have inconsistent mappings in Quine 1.4.0 and later.
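As a rough model of how these pieces fit together, the Python sketch below maps a partition key to a cluster position with a stable hash. It then builds an ID that carries its owning position alongside a hash of the remaining values. Everything here is hypothetical: `position_for_key` stands in for kafkaHash (its SHA-256 hash is not meant to match kafkaHash), `loc_id_from` stands in for locIdFrom, and the explicit cluster size is an assumption of the sketch. Passing the executing member's own position, as clusterPosition() would supply, yields an ID owned by the local host.

```python
import hashlib
import json
from typing import NamedTuple


class LocId(NamedTuple):
    """Hypothetical ID that records which cluster position owns the node."""
    position: int
    value_hash: str


def position_for_key(key: str, cluster_size: int) -> int:
    # Stand-in for kafkaHash: a stable hash of the key reduced modulo the cluster size.
    # SHA-256 is for illustration only and is not meant to match kafkaHash.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % cluster_size


def loc_id_from(position: int, *values) -> LocId:
    # Same position and values -> same ID; the owning position travels with the ID.
    canonical = json.dumps([[type(v).__name__, v] for v in values])
    return LocId(position, hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16])


CLUSTER_SIZE = 4  # hypothetical cluster of four members
pos = position_for_key("india", CLUSTER_SIZE)
node_id = loc_id_from(pos, "West Bengal", 12345)
print(node_id.position == pos)                            # True: placed by the key
print(node_id == loc_id_from(pos, "West Bengal", 12345))  # True: deterministic
```

Because the position is part of the input, changing how positions are assigned changes where IDs land. That is one way to read the note about QuineIds from earlier versions mapping inconsistently.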

Extended support for Bloom-filter-optimized persistence to clusters – Quine uses a Bloom filter to help decide whether a node already exists. When the filter cannot rule a node out, Quine has to query the persister. The Bloom filter was previously disabled for Quine Enterprise because, in a cluster, a hot spare joining the cluster had to rebuild its Bloom filter (taking minutes), degrading cluster performance while the cluster waited for the host to join. This change lets a host join the cluster immediately and build its Bloom filter in the background, sending every lookup to the persister in the meantime. Once the Bloom filter is loaded, the optimization takes effect. The net result is that a host joins quickly and the cluster stays healthy, at the cost of the new host running somewhat slower until its Bloom filter is available.
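The join-then-warm-up strategy can be sketched in Python as follows. A small Bloom filter is built on a background thread. Until it is ready, every existence check goes to the persister. Afterward, a "definitely absent" answer from the filter skips the persister entirely. `BloomFilter`, `NodeExistenceChecker`, and the in-memory set standing in for the persister are hypothetical; this is a model of the approach described above, not Quine's code.

```python
import hashlib
import threading


class BloomFilter:
    def __init__(self, num_bits: int = 1 << 16, num_hashes: int = 4) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8)

    def _positions(self, key: str):
        # Derive num_hashes bit positions by salting one hash function.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key: str) -> bool:
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key))


class NodeExistenceChecker:
    """Hypothetical: answer "does this node exist?" while the Bloom filter warms up."""

    def __init__(self, persister: set) -> None:
        self.persister = persister  # stand-in for the persistence layer
        self.persister_reads = 0
        self._bloom = BloomFilter()
        self._ready = threading.Event()

    def build_in_background(self) -> threading.Thread:
        def load() -> None:
            for node_id in list(self.persister):
                self._bloom.add(node_id)
            self._ready.set()  # from now on, negative lookups can skip the persister

        thread = threading.Thread(target=load)
        thread.start()
        return thread

    def exists(self, node_id: str) -> bool:
        # A Bloom filter has no false negatives, so "not in filter" means definitely absent.
        if self._ready.is_set() and not self._bloom.might_contain(node_id):
            return False
        # Filter still loading, or a possible match: ask the persister.
        self.persister_reads += 1
        return node_id in self.persister


checker = NodeExistenceChecker({"node-1", "node-2"})
print(checker.exists("node-99"), checker.persister_reads)  # False 1: filter not ready yet
checker.build_in_background().join()  # a real host would keep serving while this runs
print(checker.exists("node-99"), checker.persister_reads)
```

A production version would also have to record nodes created while the filter is loading. This sketch omits that step for brevity.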

Improvements resulting from One Million Events/Second testing

If you are interested in the bug fixes and improvements that came out of processing high-volume event streams with Quine, here’s a quick list pulled from the release notes:

- Enriched logging in edge cases involving shard resolution

- Simplified node wakeup protocol: edge cases involving simultaneous requests to sleep and wake should now be handled more efficiently

- Cassandra persister batched writes now respect configured timeout and consistency options

- Singleton-snapshot and journals may now be enabled at the same time

- Improved shutdown behavior in failsafe case

- Node edge and property counts will now be correctly reflected in the metrics dashboard

- Enterprise: Improved cluster stability when cluster members experience temporary disconnections

Getting Started

If you want to try Quine using your own data, here are some resources to help:

- Download Quine 1.4.0 – JAR file (263 MB) | Docker Image | GitHub

- Start learning about Quine now by visiting the Quine open source project.

- Check out the Ingest Data into Quine blog series, covering everything from ingesting from Kafka to ingesting CSV data

- CDN Cache Efficiency Recipe – this recipe provides more ingest pattern examples

And if you require 24x7 support or have a high-volume use case and would like to try Quine Enterprise, please contact us. You can also read more about Streaming Graph here.

—————————-

Header image: Photo by Nina Luong on Unsplash

Additional image: Photo by Ricardo Gomez Angel on Unsplash
