Adding Quine to the Insider Threat Detection Proof of Concept

A lot has changed since we first posted the Stop Insider Threats With Automated Behavioral Anomaly Detection blog post. Most significantly, thatDot released Quine, our streaming graph, as an open source project just as the industry is recognizing the value of real-time ETL and complex event processing in service of business requirements. This is especially true in finance and cybersecurity, where minutes (seconds or even milliseconds) can mean the difference between disaster, survival or success.

Our goal, at the time, was to show how anomaly detection on categorical data could be used to resolve complex challenges utilizing an industry recognized standard benchmark dataset, which happened to be static. The approach we used then was to pre-process (batch) the VAST Insider Threat challenge dataset with Python then ingest that processed stream of data with thatDot’s Novelty Detector to identity the bad actor.

But with a new tool in our kit we decided to see what would be involved in updating the workflow by replacing the Python pre-processing, instead using Quine in front of Novelty Detector in our pipeline.

This involved:

- Defining the ingest queries required to consume and shape the VAST datasets; and

- Developing a standing query to output the data to Novelty Detector for anomaly detection.

Data from the dataset is broken into three files:

- Employee to office and source IP address mapping in employeeData.csv

ingestStreams:

- type: FileIngest

path: employeeData.csv

parallelism: 61

format:

type: CypherCsv

headers: true

query: >-

MATCH (employee), (ipAddress), (office)

WHERE id(employee) = idFrom("employee", $that.EmployeeID)

AND id(ipAddress) = idFrom("ipAddress",$that.IP)

AND id(office) = idFrom("office",$that.Office)

SET employee.id = $that.EmployeeID,

employee:employee

SET ipAddress.ip = $that.IP,

ipAddress:ipAddress

SET office.office = $that.Office,

office:office

CREATE (ipAddress)<-[:USES_IP]-(employee)-[:SHARES_OFFICE]->(office)

- Proximity reader data from door badge scanners in proxLog.csv

- type: FileIngest

path: proxLog.csv

format:

type: CypherCsv

headers: true

query: >-

MATCH (employee), (badgeStatus)

WHERE id(employee) = idFrom("employee", $that.ID)

AND id(badgeStatus) = idFrom("badgeStatus",$that.ID,$that.Datetime,$that.Type,$that.ID)

SET employee.id = $that.ID,

employee:employee

SET badgeStatus.type = $that.Type,

badgeStatus.employee = $that.ID,

badgeStatus.datetime = $that.Datetime,

badgeStatus:badgeStatus

CREATE (employee)-[:BADGED]->(badgeStatus)

- Network traffic in IPLog3.5.csv

- type: FileIngest

path: IPLog3.5.csv

format:

type: CypherCsv

headers: true

query: >-

MATCH (ipAddress), (request)

WHERE id(ipAddress) = idFrom("ipAddress",$that.SourceIP)

AND id (request) = idFrom("request", $that.SourceIP,$that.AccessTime, $that.DestIP, $that.Socket)

SET request.reqSize = $that.ReqSize,

request.respSize = $that.RespSize,

request.datetime = $that.AccessTime,

request.dst = $that.DestIP,

request.dstport = $that.Socket,

request:request

SET ipAddress.ip = $that.SourceIP,

ipAddress:ipAddress

CREATE (ipAddress)-[:MADE_REQUEST]->(request)

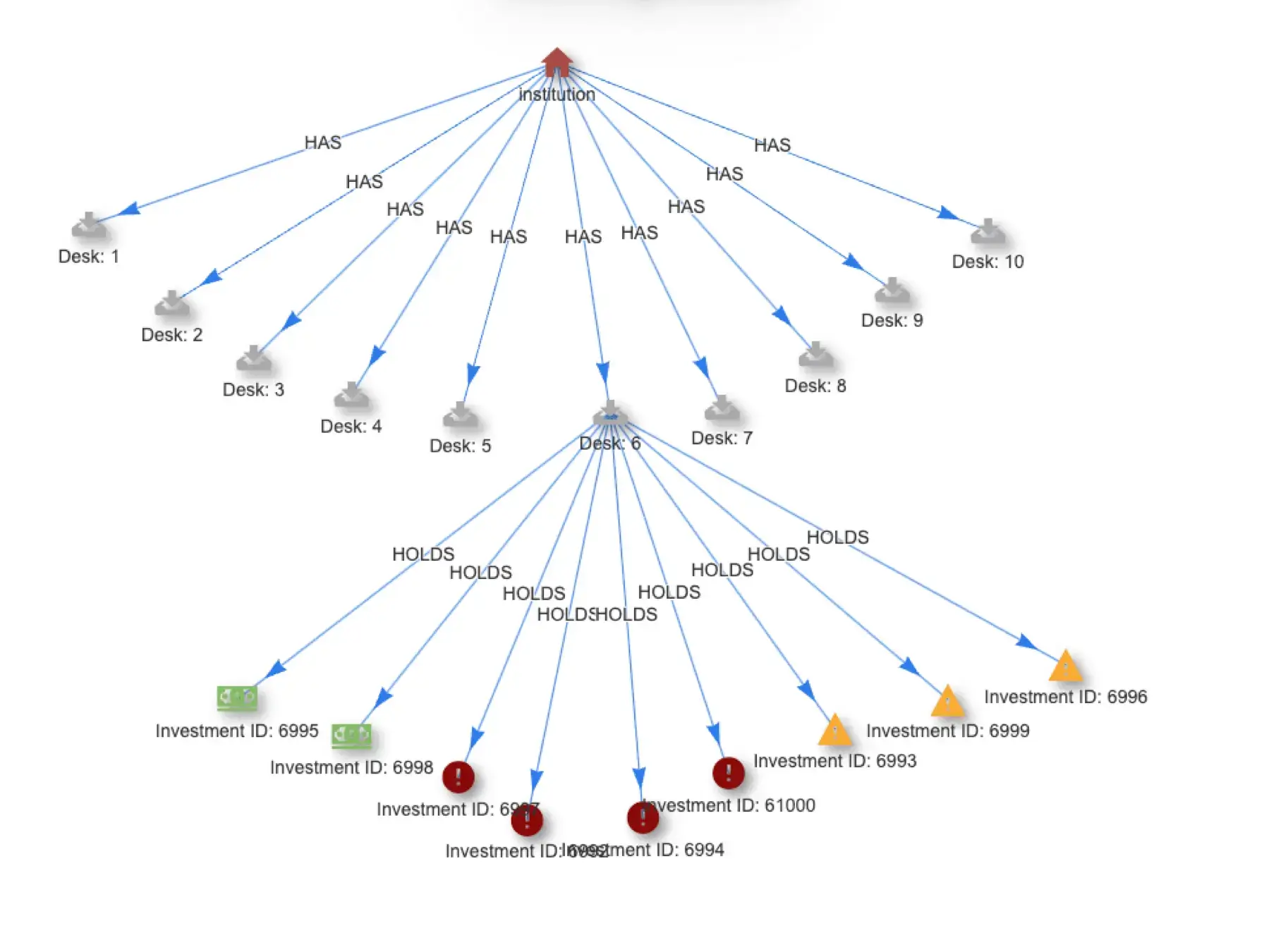

These ingests form a basic structure that looks like:

Because we have created an intuitive schema for identifying nodes by way of feeding idFrom() deterministic and descriptive data that can be used to query for them very efficiently (and do so with sub-millisecond latency).

A quick query efficiently displays relevant properties from connected nodes.

Moving from Batch to Real Time Monitoring

While this is certainly an improvement from our previous workflow, it is still highly manual (i.e., having to explicitly query for the data we’re looking for). The promise of a Quine to Novelty Detector workflow is automation with real-time results.

By ingesting the data in chronological order (as presented in the source files), we are able to easily match proximity network events to the last associated proximity badge event in real-time.

This is accomplished via standing query matches like:

standingQueries:

- pattern:

query: >-

MATCH (request)<-[:MADE_REQUEST]-(ipAddress)<-[:USES_IP]-(employee)-[:BADGED]->(badgeStatus)

RETURN DISTINCT id(request) AS requestid

type: Cypher

outputs:

print-output:

type: CypherQuery

query: >-

MATCH (request)<-[:MADE_REQUEST]-(ipAddress)<-[:USES_IP]-(employee)-[:BADGED]->(badgeStatus)

WHERE id(request) = $that.data.requestid

AND badgeStatus.datetime<=request.datetime

WITH max(badgeStatus.datetime) AS date, request, ipAddress

MATCH (request)<-[:MADE_REQUEST]-(ipAddress)<-[:USES_IP]-(employee)-[:BADGED]->(badgeStatus)

WHERE badgeStatus.datetime=date

RETURN badgeStatus.type AS status,ipAddress.ip AS src,request.dstport AS port,request.dst AS dst

The question remains, “How do we share the standing query matches from Quine to Novelty Detector?” This can be done in a number of ways (all via standing query outputs) including, but not limited to:

- Writing results to a file that Novelty Detector ingests;

- Emitting webhooks from Quine to Novelty Detector; or

- Publishing results to a Kafka topic to be ingested by Novelty Detector.

Although the first two choices will work, they are severely suboptimal. Consider a simple example of a single employee’s data:

Visualizing data from a single employee.

Writing the aggregate 115,434 matches would be done one record at a time (on each standing query match) to the filesystem.

andThen:

type: WriteToFile

path: behaviors.jsonl

Using webhooks suffer the same issue as writing to file, and introduces induced latency from the HTTP transactions.

andThen:

type: PostToEndpoint

url: http://localhost:8080/api/v1/novelty/behaviors/observe?transformation=behaviors

Ultimately, we settled on the third option as it most closely resembles production environments, and is the most performant.

andThen:

type: WriteToKafka

bootstrapServers: localhost:9092

topic: vast

format: {

type: JSON

}

The big question – did it work?

Results from the Novelty Detector UI.

Absolutely.

The anomalous activity has been identified.

Was it worthwhile?

Sure, but…

It Don’t Mean a Thing If It Ain’t Got That Real-Time Swing

Although we were able to accomplish the same results with Quine in a single step this was still a batch processing-based exercise. The true value of a Quine to Novelty Detector pipeline is in the melding of complex event stream processing in Quine with shallow learning (no training data) techniques in Novelty Detector, providing an efficient solution for detecting persistent threats and unwanted behaviors in your network. This pattern, moving from batch processing, requiring heavy lifting and grooming of datasets, to real-time stream processing is one where Quine and Novelty Detector thrive.

Try it Yourself

If you’d like to try the VAST test case yourself, you can run Novelty Detector on AWS with a generous free usage tier. Instructions for configuring Novelty Detector are available here.

And the open source version of Quine is available for download here. If you are interested there is also an enterprise version that offers clustering for horizontal scaling and resilience.

And if you’d prefer a demo or have additional questions, check out Quine community slack or send us an email.