The Challenges of Finding Fraud on the Blockchain

Blockchain-based technology has grown explosively, with over 10,000 cryptocurrencies alone available to rapidly growing consumer and commercial user bases. For cryptocurrencies to be embraced as alternatives to fiat currencies, real-time governance and compliance techniques are needed to ensure confidence in the space. The combination of new technology, well-established user expectations for real-time transactions, and rapidly evolving regulations demands new tools to handle the complexities of these distributed and pseudonymized systems.

Detecting, tracing, and mitigating fraud across blockchains relies on many of the same practices used by more traditional banking systems: modeling user behaviors, watching for transactions that look suspicious relative to known exploits or typical behavior patterns (often grouped under “know your customer,” or KYC, practices), and acting rapidly to limit fraudulent transactions (e.g., from hacked accounts), ideally in real time. The use of pseudonymity practices (e.g., private addresses), however, requires new data analysis techniques and mechanisms that maximize the contextual value of the available data for identifying fraud, while minimizing the impact of false positives and investigative overhead on customers and business operations.

Given that user identity data is more limited in crypto, it becomes necessary to maximize the use of available information about the interactions of accounts and wallets to identify and trace money laundering. Fortunately, the blockchains underlying cryptocurrencies are essentially append-only ledgers that provide a complete history of such interactions, and that history can be readily analyzed, so long as tools suited to modeling and querying these relationships are available.

Relational Databases Work at Low Transaction Volumes

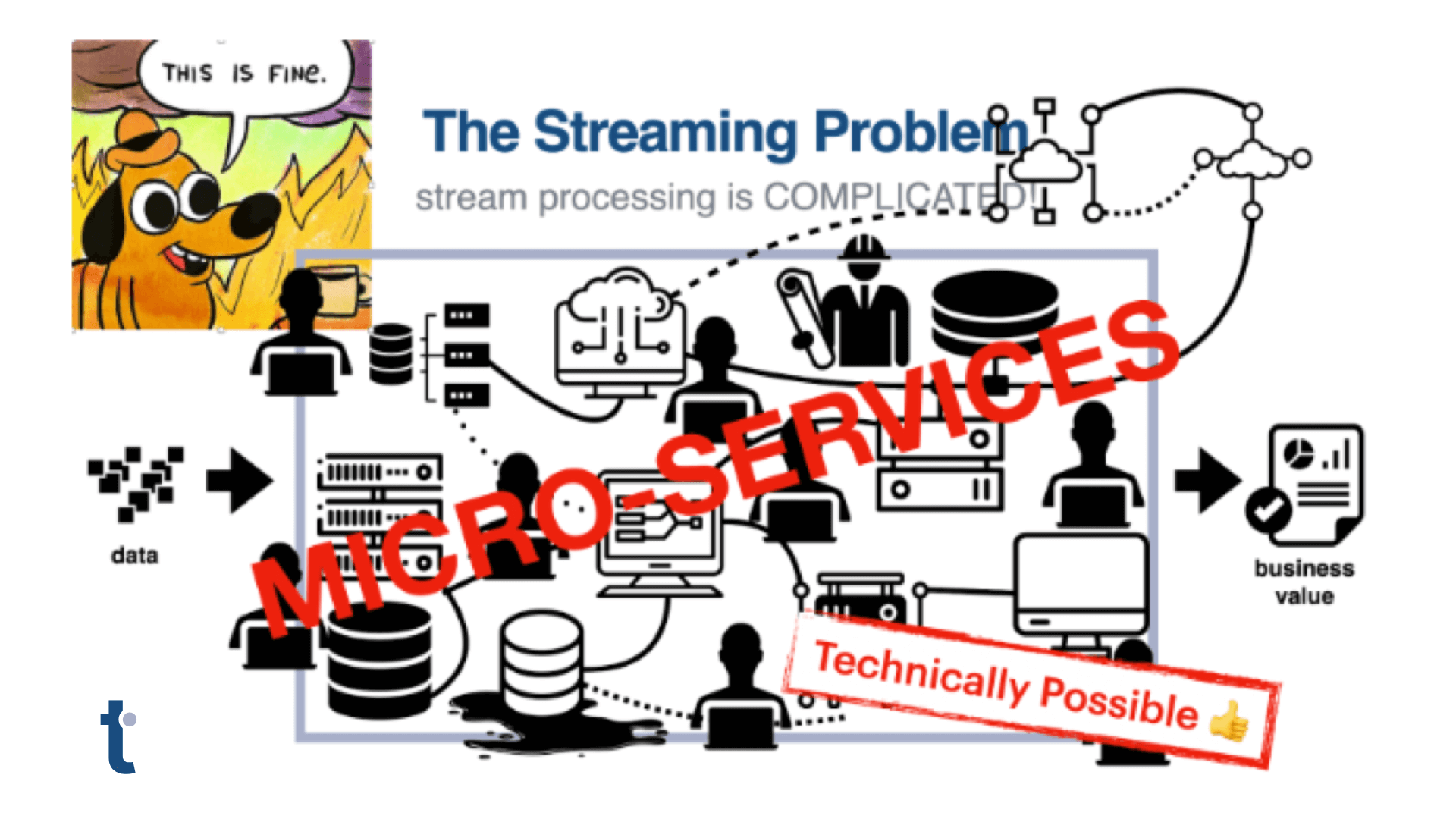

Modeling and monitoring relationships between event data can be done at low volume using legacy relational database tools. Tables are built to represent the relationships between an address and its transactions, which are joined with tables about the addresses and their accounts, which are then joined with tables about blocks, which are joined with tables from other blockchains… Such “nested joins” and the use of foreign keys to relate tables together are the state of the art today, but they are computationally expensive and slow to return query responses. Reducing queries to small blocks of time has been the modus operandi of the industry. However, “time windowing,” although a well-established practice, limits the data used to make decisions. That is the antithesis of the context enrichment we are seeking when we analyze the relationships between blockchain event logs.
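To make the cost of nested joins concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `transfers` table, its columns, and the addresses are hypothetical and chosen only for illustration; a real blockchain schema would be far larger. Note that tracing funds three hops from one address already takes two self-joins, and each further hop adds another.

```python
import sqlite3

# Hypothetical, simplified schema: one row per transfer between addresses.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE transfers (
        tx_id    TEXT PRIMARY KEY,
        sender   TEXT,
        receiver TEXT,
        amount   REAL
    );
    INSERT INTO transfers VALUES
        ('t1', 'addr_A', 'addr_B', 5.0),
        ('t2', 'addr_B', 'addr_C', 4.9),
        ('t3', 'addr_C', 'addr_D', 4.8),
        ('t4', 'addr_X', 'addr_Y', 1.0);
""")

# Every additional hop in the trace needs another self-join on the same table.
three_hop_query = """
    SELECT h1.sender, h1.receiver, h2.receiver, h3.receiver
    FROM transfers AS h1
    JOIN transfers AS h2 ON h2.sender = h1.receiver
    JOIN transfers AS h3 ON h3.sender = h2.receiver
    WHERE h1.sender = ?
"""
paths = conn.execute(three_hop_query, ("addr_A",)).fetchall()
print(paths)  # [('addr_A', 'addr_B', 'addr_C', 'addr_D')]
```

Adding a time filter to each joined copy of the table would make the query cheaper, but it would also hide any hop that falls outside the window, which is the trade-off described above.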

Using Categorical Data to Process the Blockchain

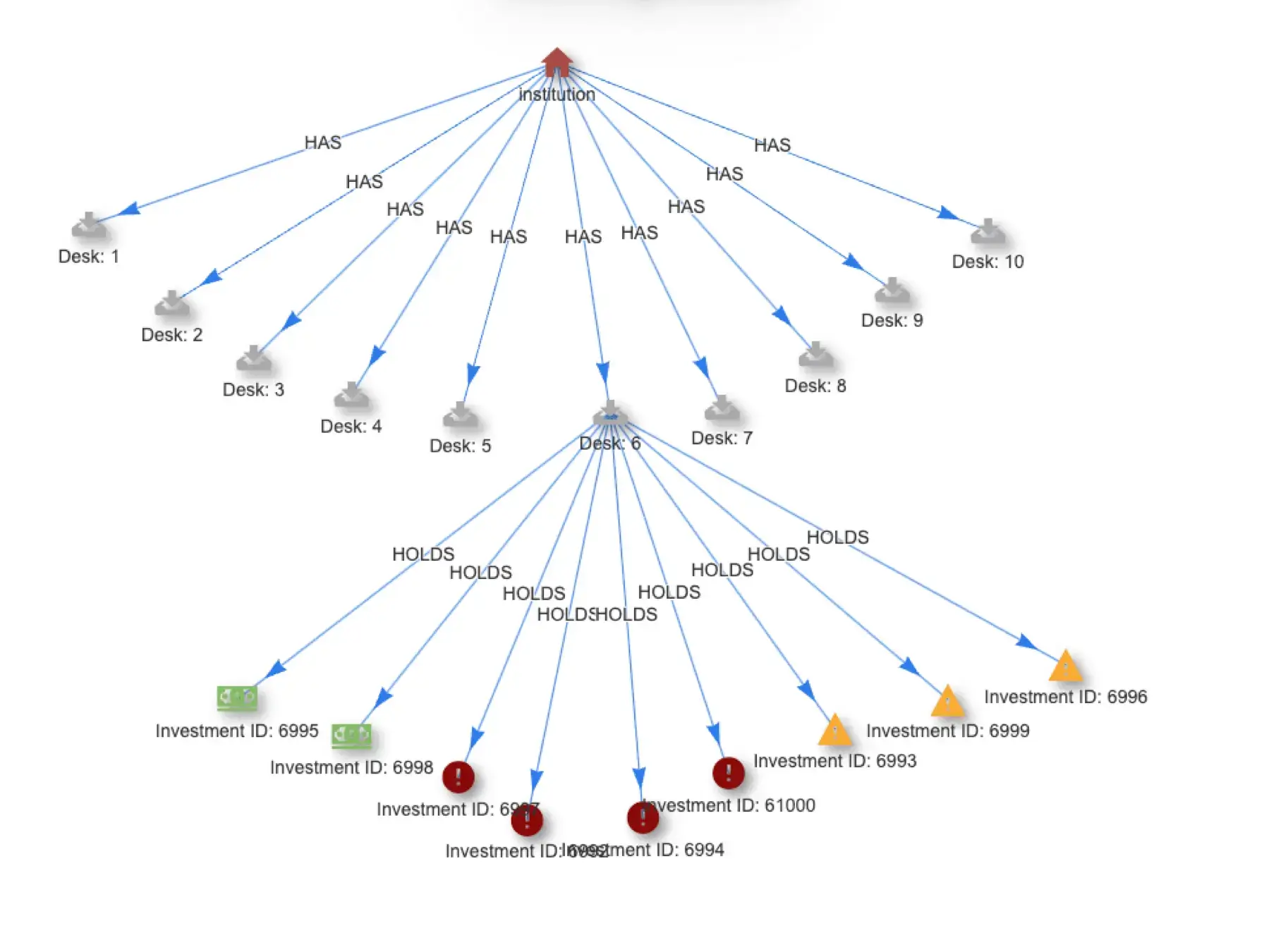

Enter graph technology. Graph data structures are ideal for modeling the relationships described in blockchain events. Flows of cryptocurrency between accounts and wallets are natural inputs for graph data modeling. Accounts, addresses, time references, devices, assets, transaction details, etc. are all examples of categorical data connected by relationships, and are therefore well suited to being represented as the nodes, edges, and properties of a graph data model. Most importantly, the graph data model makes the relationships between entities first-class citizens, so the costs and complexity associated with table joins are eliminated.
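As a rough illustration of that idea, the sketch below stores transfers as edges in a small in-memory graph. The `Transfer` and `TransferGraph` names, and the sample addresses, are hypothetical and not part of any particular graph database. Because each address keeps its own outgoing edges, following a flow of funds is a lookup per hop rather than a join per hop.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    """An edge: funds moving from one address (node) to another."""
    tx_id: str
    sender: str
    receiver: str
    amount: float  # edge property


class TransferGraph:
    def __init__(self):
        # Adjacency list: address -> transfers sent from that address.
        self.outgoing = defaultdict(list)

    def add(self, transfer):
        self.outgoing[transfer.sender].append(transfer)

    def downstream(self, start, max_hops):
        """Return the addresses reachable from start within max_hops transfers."""
        seen = {start}
        frontier = [start]
        for _ in range(max_hops):
            next_frontier = []
            for address in frontier:
                for transfer in self.outgoing.get(address, []):
                    if transfer.receiver not in seen:
                        seen.add(transfer.receiver)
                        next_frontier.append(transfer.receiver)
            frontier = next_frontier
        seen.discard(start)
        return seen


graph = TransferGraph()
graph.add(Transfer("t1", "addr_A", "addr_B", 5.0))
graph.add(Transfer("t2", "addr_B", "addr_C", 4.9))
graph.add(Transfer("t3", "addr_C", "addr_D", 4.8))
graph.add(Transfer("t4", "addr_X", "addr_Y", 1.0))

print(sorted(graph.downstream("addr_A", max_hops=2)))  # ['addr_B', 'addr_C']
print(sorted(graph.downstream("addr_A", max_hops=3)))  # ['addr_B', 'addr_C', 'addr_D']
```

Changing the trace depth here is a matter of changing `max_hops`; the query itself does not need to be rewritten.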

Graph is the ideal data model for blockchain relationship tracing.

Knowing that a graph data model aligns well with blockchain event data, we then need to confront the well-known performance limitations of graph databases. Graph databases are still databases, and queries that traverse many degrees of relationship dramatically impact database performance. Unfortunately, this leads developers to once again fall back on batch processing with time-limited windows of data. While graph is more efficient than relational databases at modeling relationships, it still lacks the throughput needed for real-time fraud detection and mitigation use cases.

What is needed is a system that combines the graph data model with an event processing architecture that provides enough throughput to cost-effectively perform deep graph traversal queries across the complete history of one or more blockchains’ events. Achieving such performance maximizes the contextual value of available event data by looking at both real-time and historical transactions, while acting fast enough to challenge new transactions in real time.
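The toy sketch below shows the general shape of such a system: each incoming event updates the graph and is immediately evaluated against everything seen so far. It is an illustration under stated assumptions, not Quine's implementation or API. The `StreamingTaintTracker` class and the addresses are hypothetical, and the sketch deliberately ignores timestamps and amounts, which a real tracing model would need to take into account.

```python
from collections import defaultdict


class StreamingTaintTracker:
    """Toy event-driven tracer: flags addresses downstream of known-bad ones."""

    def __init__(self, flagged_addresses):
        self.tainted = set(flagged_addresses)
        self.outgoing = defaultdict(set)  # sender -> receivers seen so far

    def _spread(self, start):
        """Follow stored edges from start; return addresses newly marked tainted."""
        newly_tainted = []
        stack = [start]
        while stack:
            address = stack.pop()
            for receiver in self.outgoing.get(address, ()):
                if receiver not in self.tainted:
                    self.tainted.add(receiver)
                    newly_tainted.append(receiver)
                    stack.append(receiver)
        return newly_tainted

    def on_transfer(self, sender, receiver):
        """Ingest one transfer event and report what it newly taints."""
        self.outgoing[sender].add(receiver)
        if sender in self.tainted:
            # The new edge may connect a flagged address to older history.
            return self._spread(sender)
        return []


tracker = StreamingTaintTracker({"addr_A"})
print(tracker.on_transfer("addr_B", "addr_C"))  # []
print(tracker.on_transfer("addr_C", "addr_D"))  # []
print(tracker.on_transfer("addr_A", "addr_B"))  # ['addr_B', 'addr_C', 'addr_D']
```

The third event is the interesting one: a single new transfer links the flagged address to previously ingested history, so the alert covers addresses whose own transfers looked unremarkable when they arrived. A time-windowed batch job that had already discarded those older events would miss them.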

Enter Quine Streaming Graph for Fraud Detection

This is where Quine streaming graph comes in. Quine is designed to process high volumes of event stream data in real time in order to detect complex and sometimes subtle patterns of the sort that might indicate fraud. Quine scales to tens of thousands of events per node, and can easily handle blockchain transaction volumes in real time, while also providing access to the complete trace of activities through and across blockchains. Quine can simultaneously consume both streaming data sources (Kafka and Kinesis) and static sources stored in databases and data lakes in order to build an integrated graph data model.

If you are interested in trying Quine yourself, download it here and try it with the Ethereum tag propagation recipe. If you have questions or want to check out the community, join the Quine Slack or visit our GitHub page.

Blog header photo credit: Photo by Héctor J. Rivas on Unsplash